A while back, when I was working on some changes for FreeBSD, I wanted to check out our source tree. A very typical thing that every developer does every day, that is

git clone https://git.FreeBSD.org/src.git

However, the FreeBSD git server is pretty far from me. There’s a GeoDNS system in front of it, so I usually hit the one in Frankfurt, Germany.

Still, it’s pretty slow!

root@devbsd14:~ # git clone https://git.FreeBSD.org/src.git

Cloning into 'src'...

remote: Enumerating objects: 4234853, done.

remote: Counting objects: 100% (381211/381211), done.

remote: Compressing objects: 100% (28321/28321), done.

Receiving objects: 3% (152416/4234853), 48.97 MiB | 1.08 MiB/s

Okay, 1.08 MiB/s is not that bad, but I’m sure we can do better.

How about GitHub?

root@devbsd14:~ # git clone https://github.com/freebsd/freebsd-src/

Cloning into 'freebsd-src'...

remote: Enumerating objects: 4793378, done.

remote: Counting objects: 100% (398/398), done.

remote: Compressing objects: 100% (233/233), done.

Receiving objects: 16% (780550/4793378), 223.95 MiB | 2.13 MiB/s

Okay, 2.13 MiB/s is also not bad, but I have a faster connection than that!

Regardless, I needed just the latest state of the code, without the history, so to save time and bandwidth I could do

git clone --depth 1 https://git.FreeBSD.org/src.git

And now I can work.

The problem is that this was months ago, and I totally forgot about it.

While I was debugging some issue, I ran git blame and I realized that I couldn’t see anything older than three months. What?

Lucky me, I was able to understand what I did by looking into the shell history.
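In hindsight, I could have asked git directly instead of digging through my shell history. Here is a minimal sketch of that check, run against a throwaway repository so that it does not touch the network. The temporary directory, the `upstream` and `shallow` names, and the demo identity are all made up for illustration; on a real checkout only the `rev-parse` line matters (it needs Git 2.15 or newer).

```shell
#!/bin/sh
set -eu

# Throwaway playground (hypothetical paths, not the real FreeBSD tree).
work=$(mktemp -d)

# Build a tiny "upstream" repository with two commits.
git init -q "$work/upstream"
for i in 1 2; do
  echo "$i" > "$work/upstream/file.txt"
  git -C "$work/upstream" add file.txt
  git -C "$work/upstream" -c user.name=demo -c user.email=demo@example.invalid \
    commit -q -m "commit $i"
done

# Shallow clone. The file:// URL matters: --depth is ignored for plain local paths.
git clone -q --depth 1 "file://$work/upstream" "$work/shallow"

# Ask git whether history is truncated, and count the commits it can see.
is_shallow=$(git -C "$work/shallow" rev-parse --is-shallow-repository)
commit_count=$(git -C "$work/shallow" rev-list --count HEAD)
echo "shallow: $is_shallow, commits visible: $commit_count"
```

If the first value comes back as true, then git blame and git log can only see as far as the clone depth allows.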

Okay, so two questions.

- Can I get the rest of the depth/history?

- If GitHub and git.FreeBSD.org are slow, can I set up a local mirror?

Turns out, I had to ask these questions in reverse.

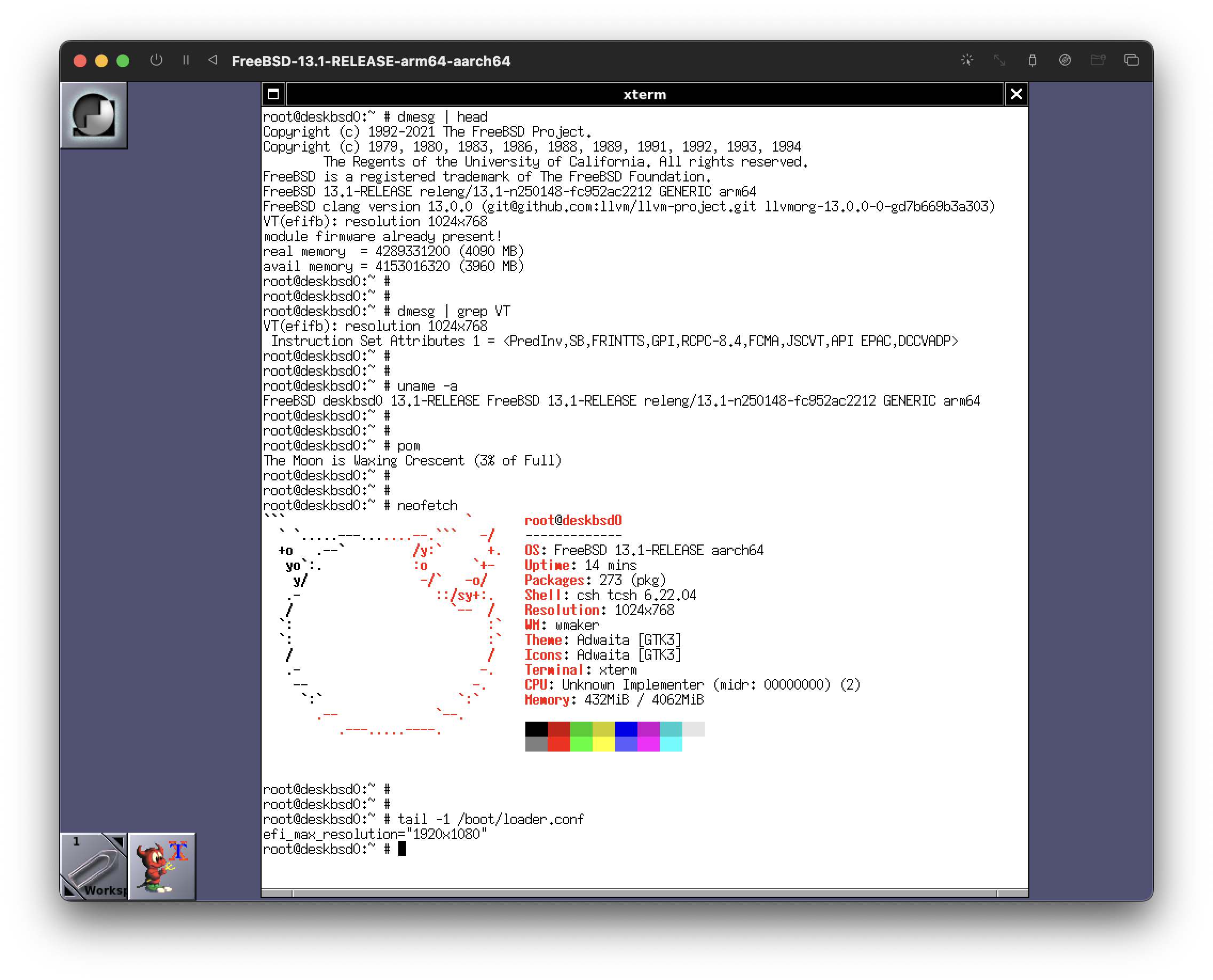

First, I set up a FreeBSD source tree mirror on my home server (which also serves this blog). The connection to that server is fast: the download speed is around 500 Mbps, compared to the 50 Mbps that I get in this apartment. Yes, I have two apartments, but one of them is only for servers 😀

That was pretty easy to do. I just needed to tell Gitea to mirror https://git.FreeBSD.org/src.git, and in a couple of minutes, it was all ready.

Next, I had to make my local checkout… unshallow. After setting up the appropriate remotes, all I had to do was

git pull --unshallow mirror main

and now I have the history all the way back to Jun 12, 1993.
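For the curious, "setting up the appropriate remotes" can be sketched roughly like this. It is a self-contained demo with local throwaway repositories standing in for git.FreeBSD.org and my Gitea mirror, so the paths, the three-commit history, and the demo identity are assumptions for illustration only. The remote name mirror and the branch main match the command above.

```shell
#!/bin/sh
set -eu

work=$(mktemp -d)

# Stand-in for the far-away upstream: three commits on a branch called main.
git init -q "$work/upstream"
git -C "$work/upstream" symbolic-ref HEAD refs/heads/main
for i in 1 2 3; do
  echo "$i" > "$work/upstream/file.txt"
  git -C "$work/upstream" add file.txt
  git -C "$work/upstream" -c user.name=demo -c user.email=demo@example.invalid \
    commit -q -m "commit $i"
done

# Stand-in for the nearby mirror (here a bare mirror clone instead of Gitea).
git clone -q --mirror "$work/upstream" "$work/mirror.git"

# The shallow checkout that was made months ago.
git clone -q --depth 1 "file://$work/upstream" "$work/src"
commits_before=$(git -C "$work/src" rev-list --count HEAD)

# Add the mirror as a second remote and pull the missing history from it.
git -C "$work/src" remote add mirror "file://$work/mirror.git"
git -C "$work/src" pull -q --unshallow mirror main

commits_after=$(git -C "$work/src" rev-list --count HEAD)
still_shallow=$(git -C "$work/src" rev-parse --is-shallow-repository)
echo "commits: $commits_before -> $commits_after, shallow: $still_shallow"
```

The original origin remote stays in place, so the mirror is only used for the heavy lifting of fetching old history.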

Oh, right! The clone speed test!

root@devbsd14:~ # git clone git@git.bsd.am:antranigv/freebsd-src.git

Cloning into 'freebsd-src'...

remote: Enumerating objects: 4235021, done.

remote: Counting objects: 100% (4235021/4235021), done.

remote: Compressing objects: 100% (824757/824757), done.

Receiving objects: 18% (762304/4235021), 207.13 MiB | 3.53 MiB/s

Okay! Now this is a lot faster!

Lessons Learned?

- Latency matters! If the distance between my two apartments is $2 worth of commute, while the FreeBSD server is $2000 worth of commute, then my apartments are also close to each other digitally.

- When you do anything non-standard with git (e.g. --depth=1), make sure to read the docs on how to undo that later.

That’s all folks…